This work was done as part of the project component of the course ‘R181-Computing for Collective Intelligence’ during my MPhil. All the code and associated files are also uploaded on GitHub. The project stems mainly from my interest in studying whether collective agents can learn to cooperate by evolving a unique language, and in looking deeper into the resulting language to see whether it shares any characteristics with human language. The complete PDF report is available here.

Language allows for communication and cooperation in completing tasks. However, human language may not be the appropriate choice for communication between agents. Therefore, I let the agents create their own language for communication by requiring them to coordinate to complete a task. The evolved language is then analyzed and compared against a few characteristics of real-world languages.

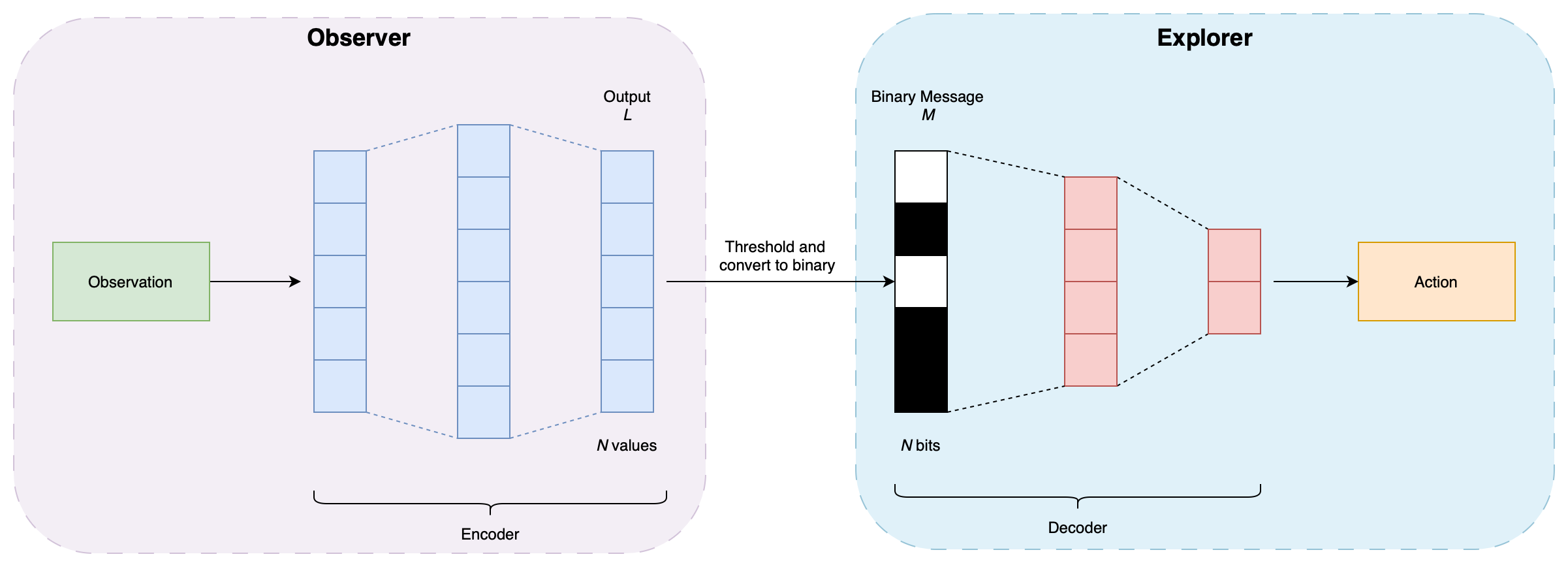

The architecture is an autoencoder-style model consisting of an encoder and a decoder, used respectively by the two agents, the Observer and the Explorer, as shown in the figure above.

The messages passed between the agents are binarized. This introduces an information loss that prevents the Observer from passing continuous-valued vectors, and because of this bottleneck the Observer must generate concise messages that carry the vital information. The losses are computed on the binary messages so that feedback is given on the message itself rather than on the intermediate continuous-valued vector.
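A minimal sketch of the binarization step, assuming the Observer's encoder emits one real-valued logit per message bit and that each bit is obtained by thresholding a sigmoid at 0.5. The thresholding rule and the function names are illustrative assumptions, not the project's code. It also shows why the bottleneck forces concise messages: with `n` bits there are at most `2**n` distinct messages.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def binarize(logits: list[float], threshold: float = 0.5) -> list[int]:
    """Hypothetical binarizer: squash each logit, then keep only a hard 0/1 bit.

    The continuous value is discarded, which is the information loss that stops
    the Observer from sending real-valued vectors.
    """
    return [1 if sigmoid(x) >= threshold else 0 for x in logits]


def vocabulary_size(n_bits: int) -> int:
    """Upper bound on the number of distinct messages an n-bit channel can carry."""
    return 2 ** n_bits


if __name__ == "__main__":
    print(binarize([-2.0, 0.3, 5.0, -0.1]))  # [0, 1, 1, 0]
    print(vocabulary_size(4))                # 16
```

Because a hard threshold has zero gradient almost everywhere, training with a loss computed on the binary message usually needs a workaround such as a straight-through estimator; which technique this project uses is not stated here.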

The Observer can only observe the environment and guides an environment-blind Explorer, whose only input is the messages passed by the Observer. For the setup, I use two PettingZoo environments, Simple and SimpleTag.

Findings

Communication

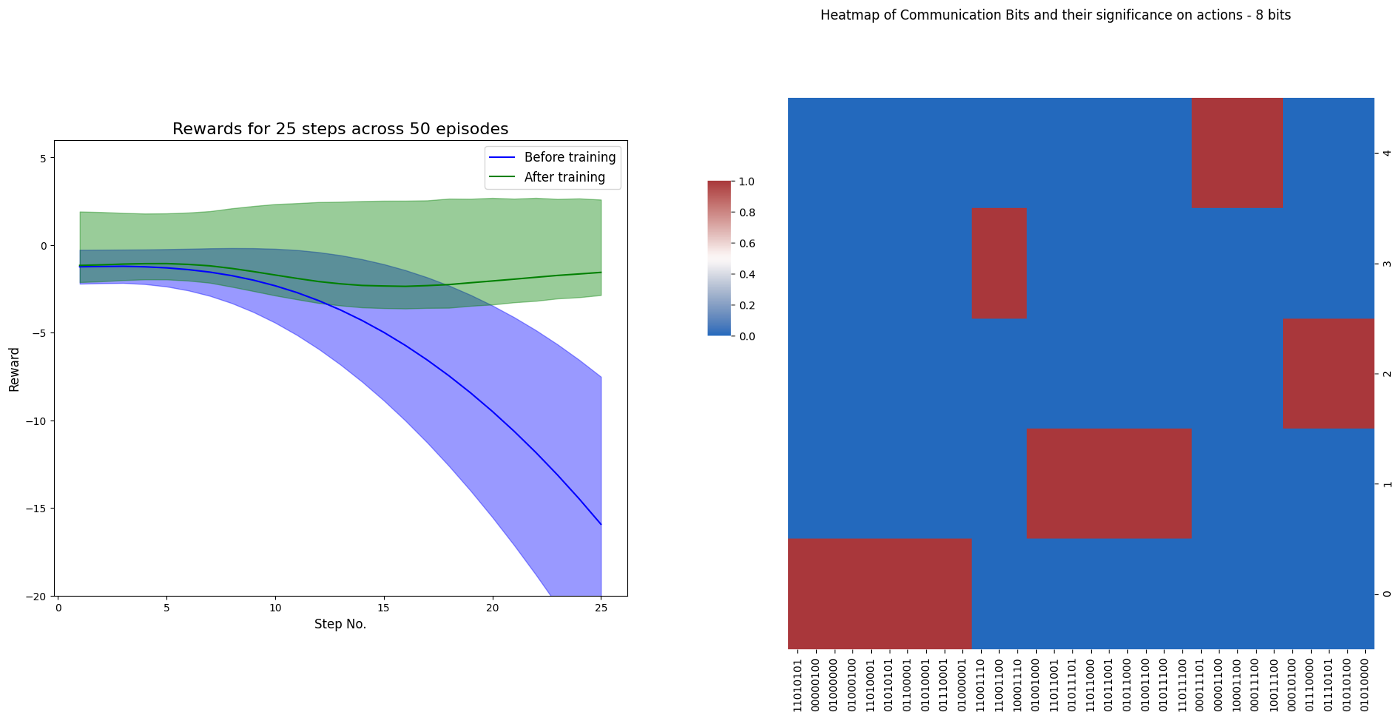

The agents converge to a communication protocol and are able to solve the environment. A communication analysis is performed to identify the unique bit combinations and the action (meaning) each one conveys to the Explorer. The figure above shows the reward progression during training and a heatmap of the bit sequences against the actions they encode. Note that there are 5 possible actions in the environment.
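The communication analysis can be sketched as counting, over logged rollouts, how often each bit sequence is followed by each action. This assumes the (sequence, action) pairs were logged during evaluation; the helper names and the example log are hypothetical. The resulting matrix is the kind of table a sequence-versus-action heatmap is drawn from, with one column per action (5 here).

```python
from collections import Counter, defaultdict


def sequence_action_counts(pairs):
    """Count (bit sequence, action) co-occurrences from logged rollouts."""
    counts = defaultdict(Counter)
    for seq, action in pairs:
        counts[tuple(seq)][action] += 1
    return counts


def heatmap_matrix(counts, n_actions=5):
    """Rows: sorted unique sequences; columns: actions 0..n_actions-1."""
    rows = sorted(counts)
    matrix = [[counts[seq][a] for a in range(n_actions)] for seq in rows]
    return rows, matrix


def dominant_action(counts):
    """Map each sequence to the action it most often produces (its 'meaning')."""
    return {seq: c.most_common(1)[0][0] for seq, c in counts.items()}


if __name__ == "__main__":
    log = [((0, 1, 1), 2), ((0, 1, 1), 2), ((1, 0, 0), 4), ((0, 1, 1), 3)]
    counts = sequence_action_counts(log)
    rows, matrix = heatmap_matrix(counts)
    print(rows)                     # [(0, 1, 1), (1, 0, 0)]
    print(matrix)                   # [[0, 0, 2, 1, 0], [0, 0, 0, 0, 1]]
    print(dominant_action(counts))  # {(0, 1, 1): 2, (1, 0, 0): 4}
```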

Another interesting finding from the heatmap is the presence of “synonyms”, where multiple sequences encode the same action, along with the non-exhaustive
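Given a mapping from each sequence to the action it encodes (for example, the most frequent action observed for that sequence), synonyms can be found by grouping sequences by action. This is a hypothetical helper, and the 3-bit sequences in the example are made up for illustration.

```python
from collections import defaultdict


def find_synonyms(seq_to_action):
    """Return {action: [sequences]} for actions encoded by more than one sequence."""
    by_action = defaultdict(list)
    for seq, action in seq_to_action.items():
        by_action[action].append(seq)
    return {a: sorted(seqs) for a, seqs in by_action.items() if len(seqs) > 1}


if __name__ == "__main__":
    protocol = {"011": 2, "111": 2, "100": 4, "000": 0}
    print(find_synonyms(protocol))  # {2: ['011', '111']}
```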

Stability of communication

Human languages are generally living and evolving, with continuous changes in meaning, structure and components. In this experiment, I analyse whether the language developed by the agents shares this property, i.e. whether it changes through new bit sequences or through changes in the action that a bit sequence encodes. To test this, training continues for more epochs after the agents reach stable communication. The learning rate acts as a parameter that controls how fast the language evolves. The results show that training for more epochs leads to changes in the communication protocol and that a higher learning rate speeds up this process: further training adds new sequences and changes the meaning of a few existing ones.
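One way to quantify this drift is to snapshot the sequence-to-action mapping before and after the extra epochs and diff the two. This sketch assumes each snapshot is a dict from bit sequence to the action it encodes; the function name and the example data are hypothetical.

```python
def compare_protocols(before, after):
    """Diff two sequence -> action mappings taken at different training epochs."""
    shared = set(before) & set(after)
    return {
        "new": sorted(set(after) - set(before)),      # sequences that appeared
        "dropped": sorted(set(before) - set(after)),  # sequences no longer used
        "changed": sorted(s for s in shared if before[s] != after[s]),  # meaning shifted
    }


if __name__ == "__main__":
    epoch_a = {"00": 0, "01": 1, "10": 2}
    epoch_b = {"00": 0, "01": 3, "11": 4}
    print(compare_protocols(epoch_a, epoch_b))
    # {'new': ['11'], 'dropped': ['10'], 'changed': ['01']}
```

Running such a diff at regular checkpoints under different learning rates would give a simple measure of how quickly the protocol changes.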

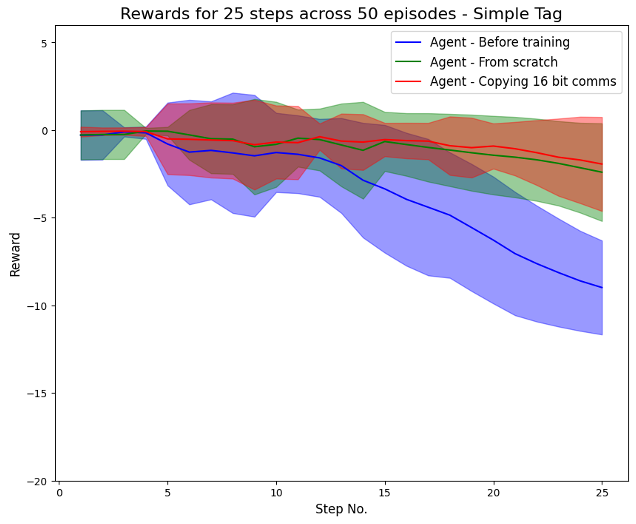

Generalizability of communication

Another characteristic of languages is that the same “phrases” should apply across different environments. To test this, a trained model is run on a different environment and each sequence is checked to see whether it still encodes the same action. This property does not hold for the learned communication: although ~60% of the sequences are carried over to the new environment, only ~18% of the sequences retain their meaning.
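The two percentages can be computed by comparing the sequence-to-action mapping observed in the original environment with the one observed in the new environment. This sketch assumes both fractions are taken over the sequences used in the original environment (the report may define the denominators differently); the helper name and the example mappings are made up.

```python
def transfer_stats(source, target):
    """Return (fraction carried over, fraction that keeps its action)."""
    if not source:
        raise ValueError("source protocol is empty")
    shared = set(source) & set(target)
    carried_over = len(shared) / len(source)
    same_meaning = sum(source[s] == target[s] for s in shared) / len(source)
    return carried_over, same_meaning


if __name__ == "__main__":
    simple = {"000": 0, "001": 1, "010": 2, "011": 3, "100": 4}
    simple_tag = {"000": 0, "001": 2, "010": 1, "111": 4}
    print(transfer_stats(simple, simple_tag))  # (0.6, 0.2)
```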

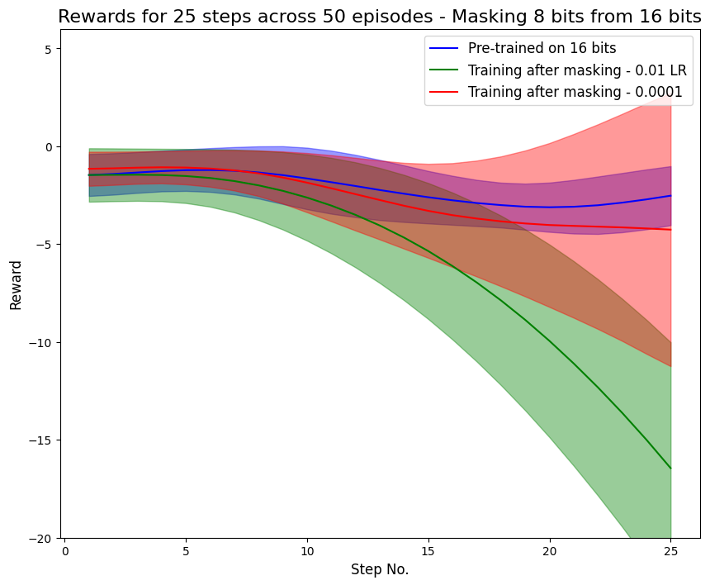

Communication breakdown

This experiment tests the ability of the agents to adapt to a “communication breakdown”. To simulate one, the model is first trained on an environment, and some of the message bits are then masked in subsequent epochs. Half of the bits are masked; while the average reward across runs recovers, the masking still leads to a high variance in the reward, indicating that not all runs manage to adapt. Further training does not reduce this variance.
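A minimal sketch of the masking step, assuming a fixed set of bit positions is chosen once and overwritten with 0 in every subsequent message. The source does not say how masked bits are filled, so zero-filling and the helper names are assumptions. A small helper summarises final rewards across runs to expose the variance described above.

```python
import random
import statistics


def choose_mask(n_bits, fraction=0.5, seed=0):
    """Pick a fixed subset of bit positions to mask (half of them by default)."""
    rng = random.Random(seed)
    return set(rng.sample(range(n_bits), int(n_bits * fraction)))


def mask_bits(message, masked_positions, fill=0):
    """Overwrite the masked positions so the Explorer no longer sees those bits."""
    return [fill if i in masked_positions else bit for i, bit in enumerate(message)]


def reward_summary(final_rewards):
    """Mean and population standard deviation of final rewards across runs."""
    return statistics.mean(final_rewards), statistics.pstdev(final_rewards)


if __name__ == "__main__":
    mask = choose_mask(8)
    print(sorted(mask))
    print(mask_bits([1, 1, 0, 1, 0, 1, 1, 0], mask))
    print(mask_bits([1, 1, 0, 1], {0, 2}))  # [0, 1, 0, 1]
```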

Identical sequences for actions

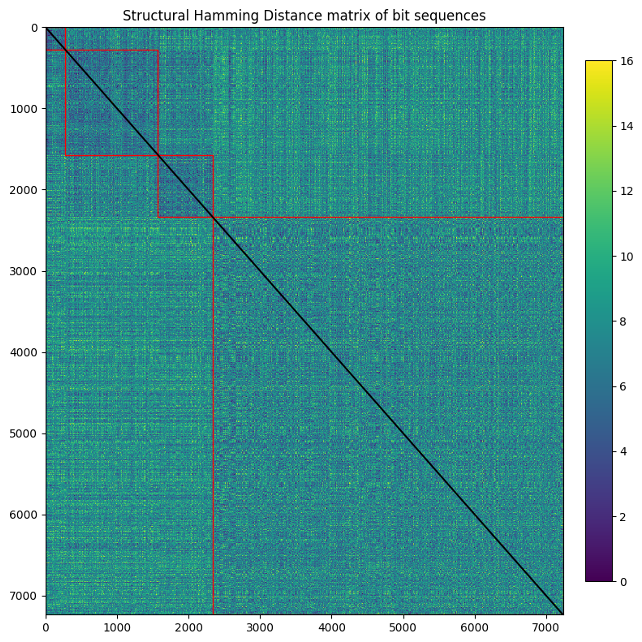

From the results collected, I analyse whether sequences encoding the same action are more “similar” to each other than to sequences encoding different actions. To do this, the Structural Hamming Distance (SHD) between sequences is computed and plotted as a heatmap. The SHDs fall into two groups: intra-action SHD, between sequences encoding the same action, and inter-action SHD, between sequences encoding different actions. In the figure above, the regions outlined in red are intra-action SHDs, while those outside are inter-action SHDs. The intra-action regions of the heatmap are slightly darker, indicating a lower SHD between sequences and therefore a higher similarity.
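For fixed-length bit sequences, I assume here that the SHD reduces to the number of positions at which two sequences differ (the plain Hamming distance). Under that assumption, the pairwise distances can be split into intra-action and inter-action groups as follows; the helper names and the example protocol are hypothetical.

```python
from itertools import combinations


def hamming(a, b):
    """Number of positions at which two equal-length bit sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have the same length")
    return sum(x != y for x, y in zip(a, b))


def split_distances(seq_to_action):
    """Split pairwise distances into same-action (intra) and different-action (inter)."""
    intra, inter = [], []
    for s, t in combinations(sorted(seq_to_action), 2):
        group = intra if seq_to_action[s] == seq_to_action[t] else inter
        group.append(hamming(s, t))
    return intra, inter


if __name__ == "__main__":
    protocol = {"000": 0, "001": 0, "110": 1, "111": 1}
    print(split_distances(protocol))  # ([1, 1], [2, 3, 3, 2])
```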

To confirm this, a t-test shows a significant difference between the SHDs of the two groups, with a p-value of $0.016$.
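Which t-test variant was used is not stated here. As one possibility, the sketch below computes Welch's t statistic and its degrees of freedom for two groups of distances using only the standard library. Turning the statistic into a p-value needs the t-distribution CDF, which `scipy.stats.ttest_ind(a, b, equal_var=False)` provides. The example numbers are made up.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each group needs at least two values")
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


if __name__ == "__main__":
    intra = [1, 2, 3, 4]
    inter = [3, 4, 5, 6]
    t, df = welch_t(intra, inter)
    print(round(t, 3), round(df, 3))  # -2.191 6.0
```

A negative `t` here means the first group (intra-action) has the smaller mean distance.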

These are a few of the interesting findings from this project; the full details are available in the PDF report.